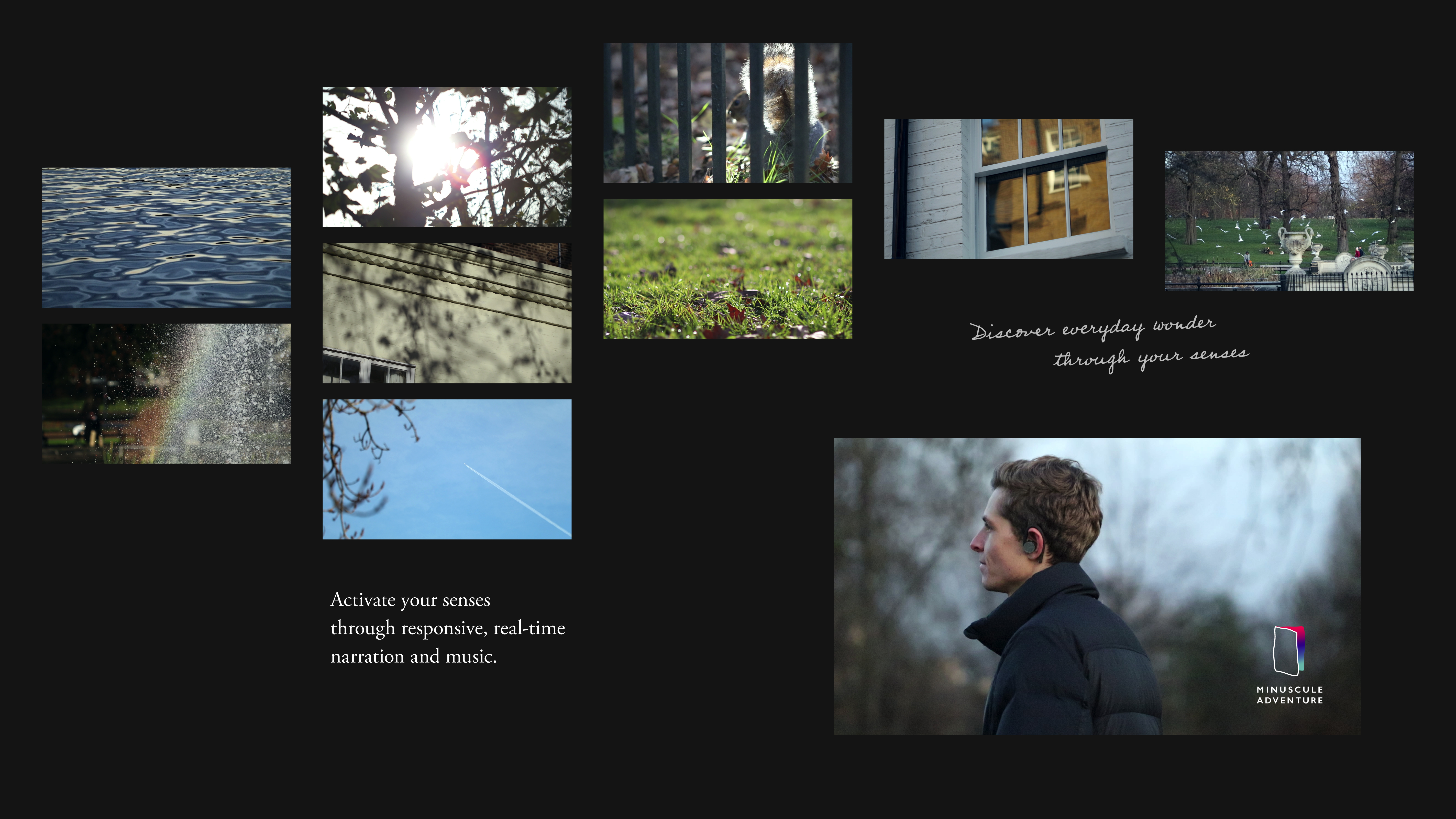

How can we activate our senses and be more present?

Minuscule Adventure

An audio experience using responsive, real-time narration and music accessed through a wearable portal

July 2020 – Jan 2021 | with Joy Zhang, Kat Zhang, Jeremy Hulse

3-minute video, aesthetic prototype, functional web-based prototype

WHAT IS IT?

Minuscule Adventure is an audio experience, accessed through a wearable portal, that activates your senses through responsive, real-time narration and music. Inspired by the lack of sensory engagement during lockdown and the fast-growing norm of working from home, we created an immersive experience that transforms a daily walk around your neighbourhood into a minuscule adventure, helping you feel a deeper connection with your environment and make today different from yesterday.

My role encompassed all creative direction, experience design, video production (storyboarding, filming all of the original footage, and editing), visual identity and graphic design, and presentation design.

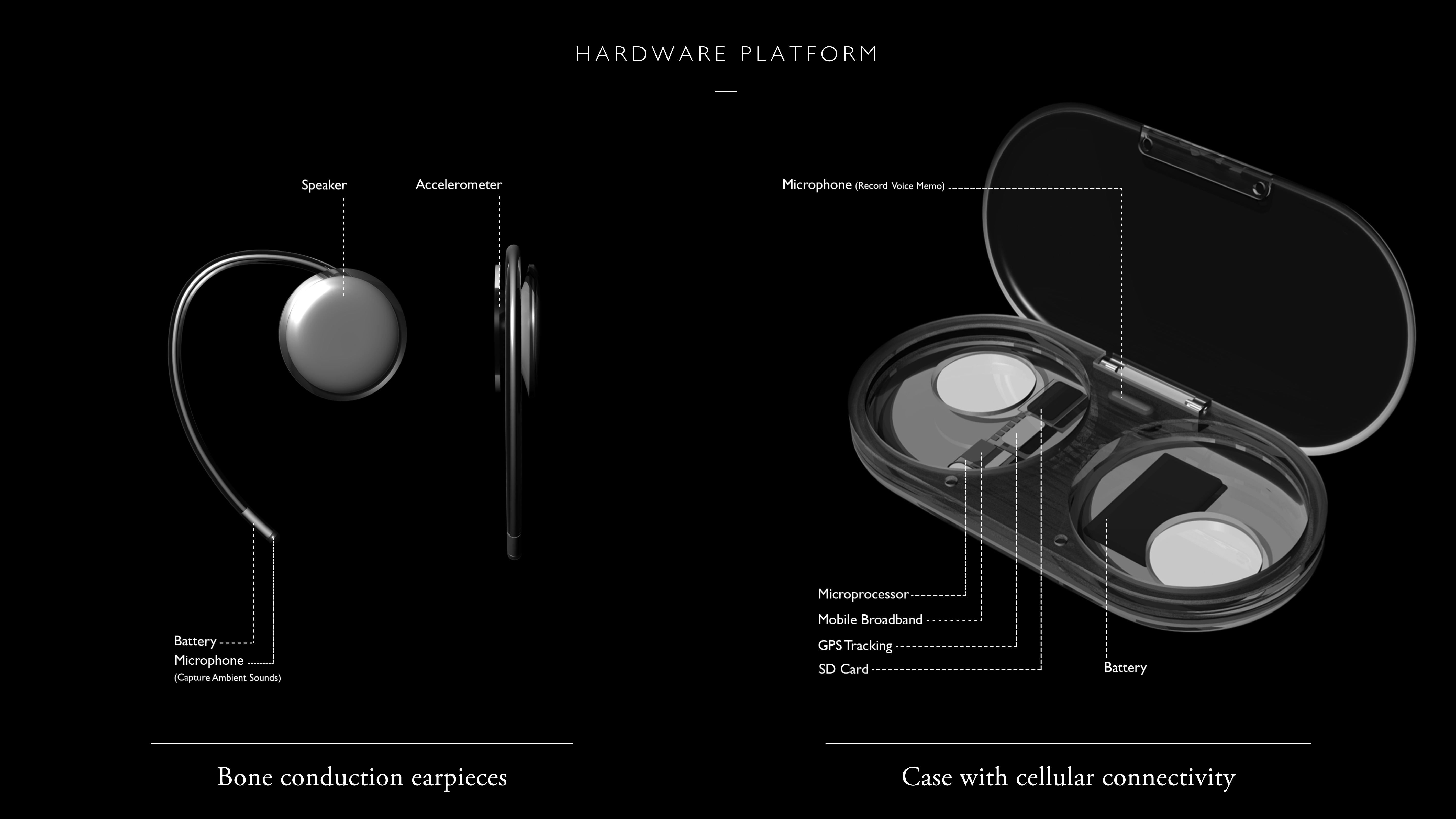

WEARABLE PORTAL

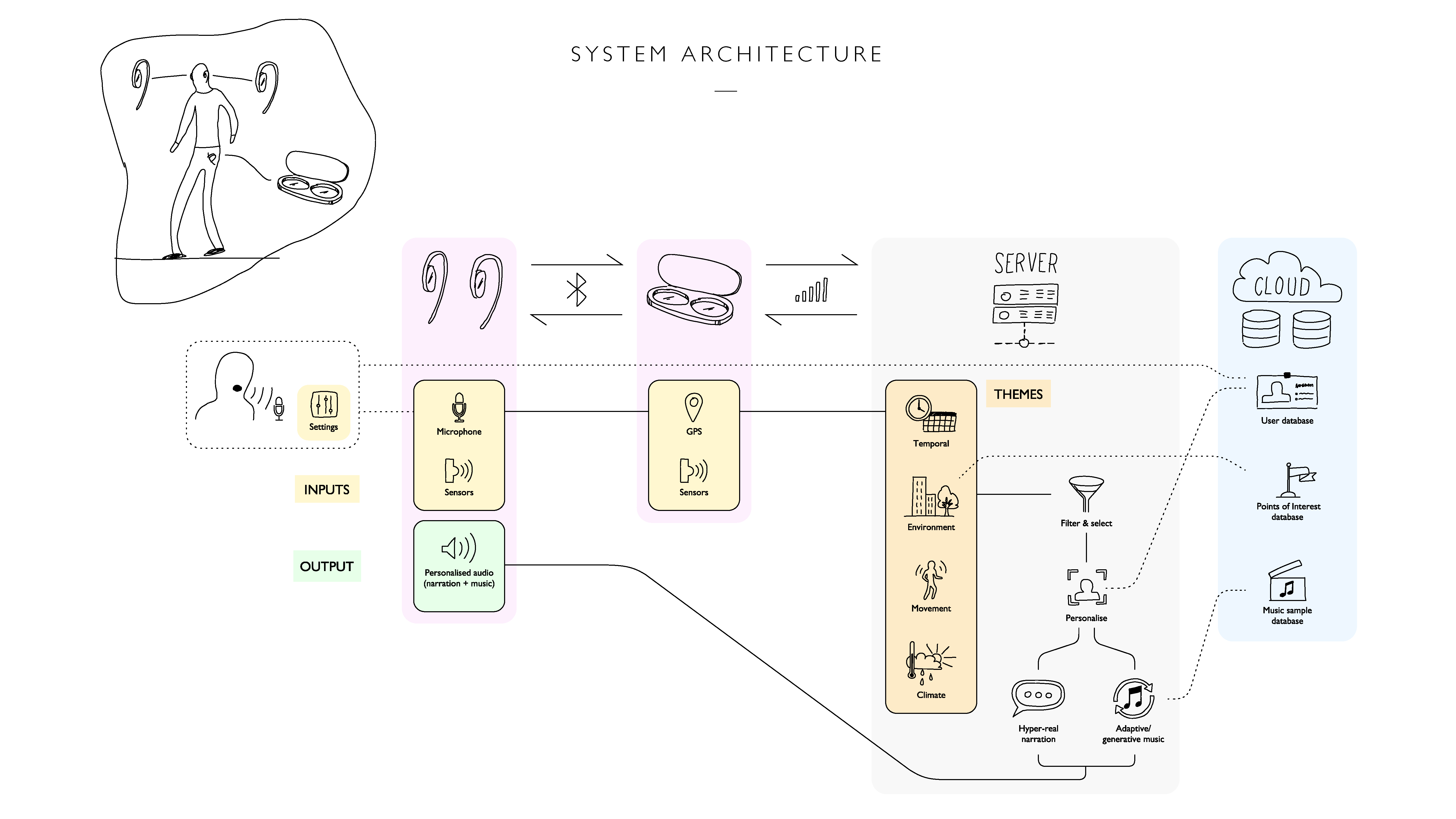

The hardware platform is designed to: 1) unplug users from screens, with a self-contained microprocessor, internet connectivity, GPS, and memory card; 2) activate the senses through earpieces with embedded accelerometers and microphones that generate hyper-live inputs. Bone conduction technology lets users keep hearing their ambient soundscape. The superimposed audio induces a perceptual shift in how the environment is experienced, directing users' attention to the everyday wonders around them.

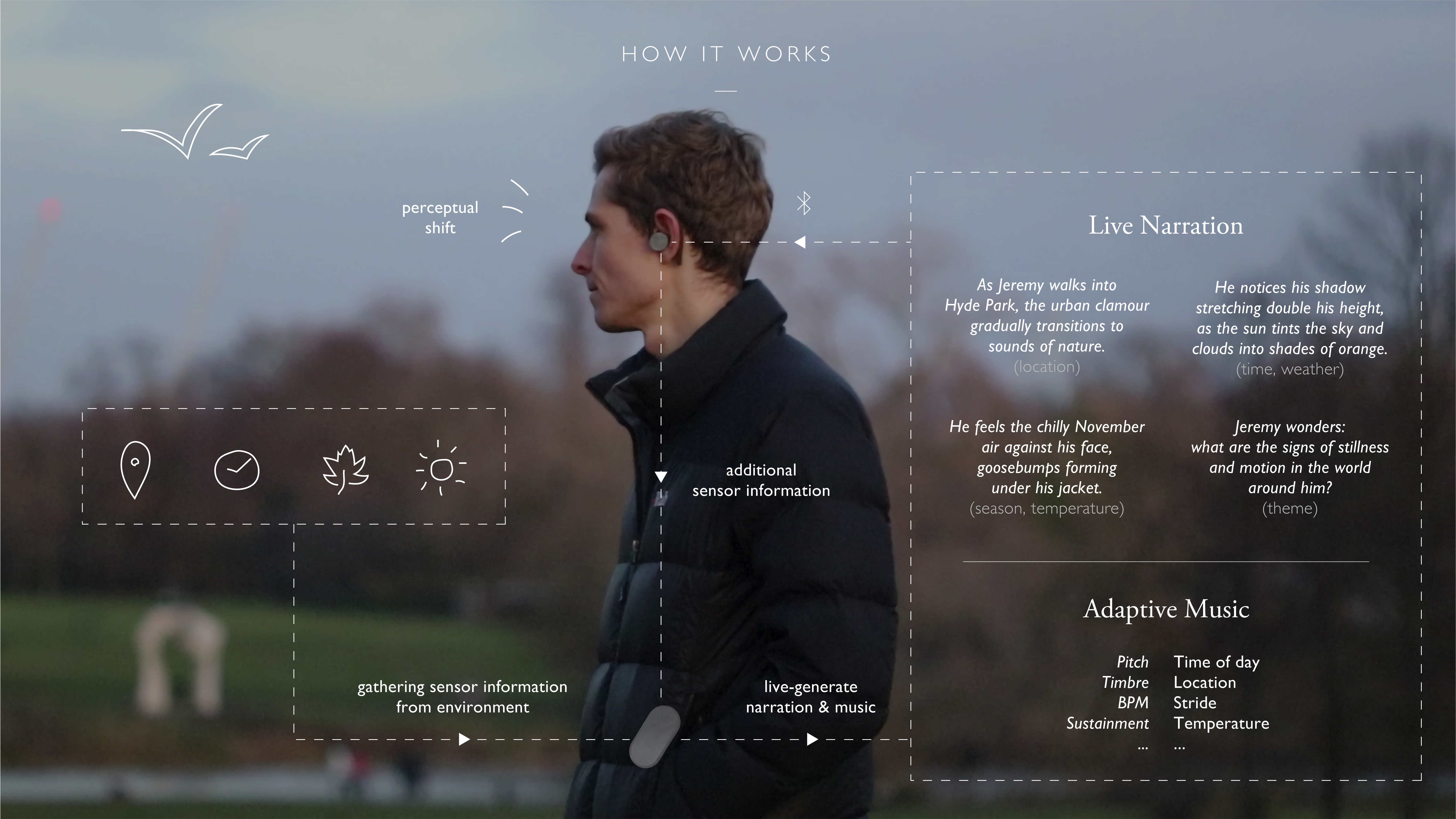

HOW IT WORKS

The case collects contextual information such as GPS location, time, and season, and queries cloud-based servers for up-to-date meteorological and location-based information. Additional sensors in the earpieces pick up ambient sounds of interest and the user’s movements, making the experience hyper-personalised. The microprocessor on board the case relays synthesised narration and adaptive music via Bluetooth to the bone conduction earphones.

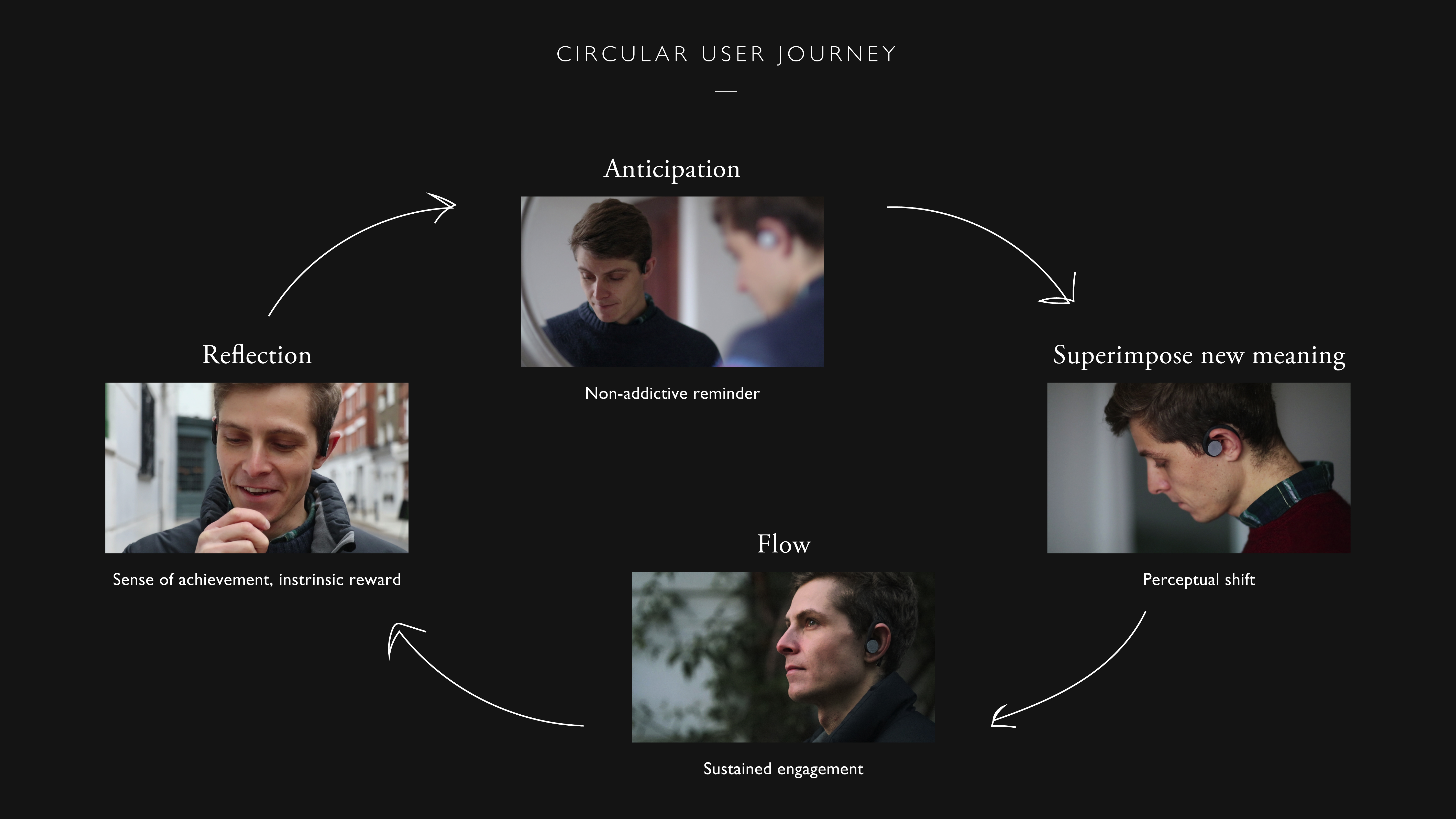

CIRCULAR USER JOURNEY

Minuscule Adventure is a complete experience from beginning to end, tied together by voice recordings. Users record them through the case after the walk, and they are sent back for the user to listen to at an arbitrary later time. The recordings act as both a reflection and a reminder, helping build anticipation for the next adventure.

CUSTOMISE LEVELS

The experience has four tiers of customisation, giving users the freedom to choose the degree of immersion or self-direction. The tiers range from a simple, themed walk with minimal audio to a fully narrated walk. Adaptive music is introduced in the more immersive levels.

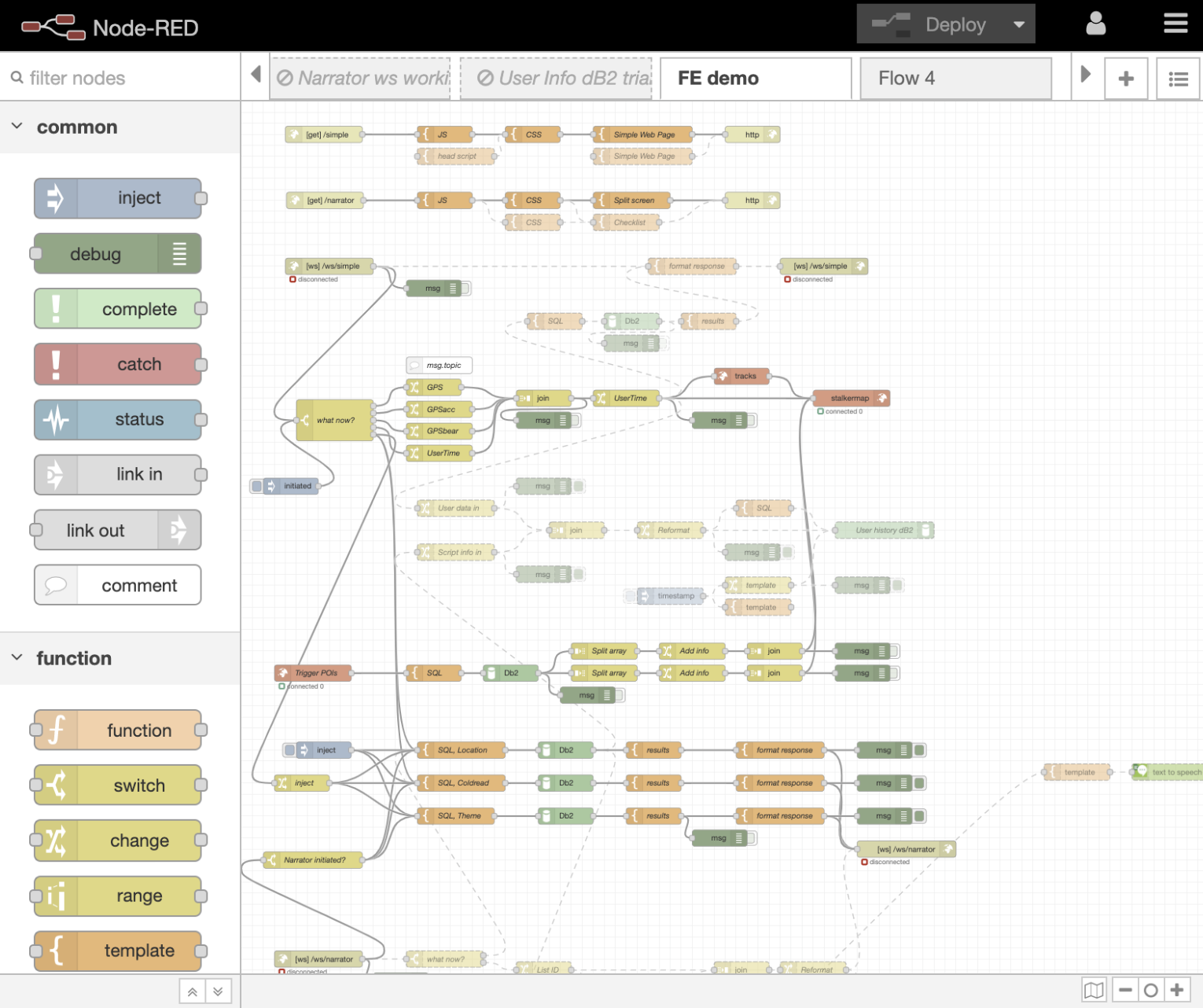

SYSTEM ARCHITECTURE

Both the earpieces and the case contain multiple sensors. The earpieces connect to the case via Bluetooth, and the case sends the data to the server over its built-in mobile connection. The server processes the sensor data and categorises it under four main themes: temporal, environment, movement and climate. This input is combined with the user's name and personal preferences to generate a personalised narration and an adaptive music track, which are mixed and relayed to the user in real time.
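The server-side categorisation step can be sketched as below. This is a minimal illustration, not the project's implementation: the sensor keys, the `THEME_OF_SENSOR` table, `UserProfile`, and `build_generation_input` are all hypothetical names. Only the four theme names and the idea of combining them with the user's name and preferences come from the description above.

```python
from dataclasses import dataclass, field

# The four themes named in the system architecture.
THEMES = ("temporal", "environment", "movement", "climate")

# Hypothetical mapping from raw sensor keys to those themes.
THEME_OF_SENSOR = {
    "time_of_day": "temporal",
    "season": "temporal",
    "ambient_sound": "environment",
    "nearby_landmark": "environment",
    "step_rate": "movement",
    "heading": "movement",
    "temperature_c": "climate",
    "weather": "climate",
}


@dataclass
class UserProfile:
    name: str
    preferences: dict = field(default_factory=dict)


def categorise(readings: dict) -> dict:
    """Group raw readings under the four themes; unknown keys are ignored."""
    grouped = {theme: {} for theme in THEMES}
    for key, value in readings.items():
        theme = THEME_OF_SENSOR.get(key)
        if theme is not None:
            grouped[theme][key] = value
    return grouped


def build_generation_input(readings: dict, user: UserProfile) -> dict:
    """Combine the themed sensor data with the user's name and preferences."""
    return {
        "user_name": user.name,
        "preferences": dict(user.preferences),
        "context": categorise(readings),
    }
```

A payload shaped like this could then be handed to whatever generates the narration script and selects the music stems. Keeping all four themes present (even when empty) gives downstream generators a stable structure to read from.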

Minuscule Adventure’s sound design was a collaborative process with students from the Information Experience Design (IED) course at the RCA. Each composer’s adaptive music can be heard in this video at the following timestamps:

Shangyun Wu — 0:00–2:35

Jiajing Zhao — 2:37–5:25

Taking inspiration from adaptive music in games, Shang composed location-based “theme tunes” while also exploring how specific agents (e.g. dogs, people) could act as triggers for additional musical elements.
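One way to picture agent-triggered elements is a small state holder that switches a musical layer on when an agent is detected and keeps it on for a short hold time. This is a hypothetical sketch: the agent-to-layer table, the layer names, and the 8-second hold are illustrative assumptions, not Shang's actual composition logic.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from detected agents to extra musical layers.
AGENT_LAYERS = {"dog": "pizzicato_strings", "person": "soft_pad"}

# Hypothetical hold time: a layer stays on this long after its last trigger,
# so brief detection gaps do not make the music flicker.
HOLD_SECONDS = 8.0


@dataclass
class LayerState:
    last_seen: dict = field(default_factory=dict)  # layer name -> timestamp (s)

    def on_detection(self, agent: str, now: float) -> None:
        """Record a detection; agents without a mapped layer are ignored."""
        layer = AGENT_LAYERS.get(agent)
        if layer is not None:
            self.last_seen[layer] = now

    def active_layers(self, now: float) -> set:
        """Layers triggered within the last HOLD_SECONDS."""
        return {layer for layer, t in self.last_seen.items() if now - t <= HOLD_SECONDS}
```

The location-based theme tune would play underneath, with `active_layers` deciding which extra elements sit on top at any moment.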

Zhao’s adaptive style is based on the characteristics of each location. By combining multiple music-generating devices with various musical processors, Zhao makes the transitions seamless and responsive to arbitrary movement across zones.
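A common technique for smooth transitions between zones is an equal-power crossfade driven by how far the listener has walked into a boundary band. This is a swapped-in, generic technique rather than Zhao's actual setup of devices and processors, and `zone_gains` and its band-width parameter are hypothetical.

```python
import math


def zone_gains(distance_into_band: float, band_width: float) -> tuple:
    """Equal-power crossfade gains (outgoing, incoming) across a zone boundary.

    distance_into_band: metres walked past the start of the transition band.
    band_width: width of the transition band in metres (hypothetical parameter).
    """
    if band_width <= 0:
        raise ValueError("band_width must be positive")
    # Clamp progress to [0, 1]; walking back out simply reverses the fade.
    t = min(max(distance_into_band / band_width, 0.0), 1.0)
    outgoing = math.cos(t * math.pi / 2)
    incoming = math.sin(t * math.pi / 2)
    return outgoing, incoming
```

Because the squared gains always sum to 1, the combined loudness stays roughly constant mid-transition. And because the gains depend only on position, the fade follows the walker back and forth across a boundary without jumps.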

David Shulman — 5:27–8:17

Dave is interested in understanding intuition, creativity and hidden senses (such as magnetoreception) through psychogeographical research. A conservatoire-trained musician, Dave puts live-recorded audio through filters and uses automation data to combine ‘natural’ acoustic recordings with more synthetic sound design approaches. He also explores the intersection where nature and technology meet by using magnetometer data to generate ‘natural’ harmonics such as the perfect fifth.
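To make the magnetometer-to-harmonics idea concrete, the sketch below turns a heading into a root pitch and derives a just perfect fifth from it. The fifth uses the 3:2 frequency ratio between the second and third harmonics of the natural harmonic series. The heading-to-pitch mapping, the 110 Hz base, and the function names are assumptions for illustration, not Dave's actual patch. The sketch also assumes a level device and ignores tilt compensation, and axis conventions vary between sensors.

```python
import math

BASE_HZ = 110.0  # hypothetical lowest root pitch (A2)


def heading_degrees(mag_x: float, mag_y: float) -> float:
    """Heading in degrees, normalised to 0-360, from horizontal magnetometer axes.

    Assumes the device is held level; no tilt compensation is applied.
    """
    return math.degrees(math.atan2(mag_y, mag_x)) % 360.0


def heading_to_root_hz(heading: float) -> float:
    """Spread one full turn over one octave above BASE_HZ (hypothetical mapping)."""
    return BASE_HZ * 2 ** (heading / 360.0)


def perfect_fifth(root_hz: float) -> float:
    """Just perfect fifth: the 3:2 ratio between the 2nd and 3rd harmonics."""
    return root_hz * 3 / 2
```

For example, facing along the sensor's x axis gives a 110 Hz root and a 165 Hz fifth. Turning gradually slides both pitches while keeping the interval pure.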

We aimed to set up the Minuscule Adventure sound design as an API for composers to interpret and generate unique content. Jiuming Duan, a stage designer also from IED, developed the strategy and basic logic. Each composer focused on certain environmental inputs as triggers for the adaptive music, and developed their own set of creative constraints.

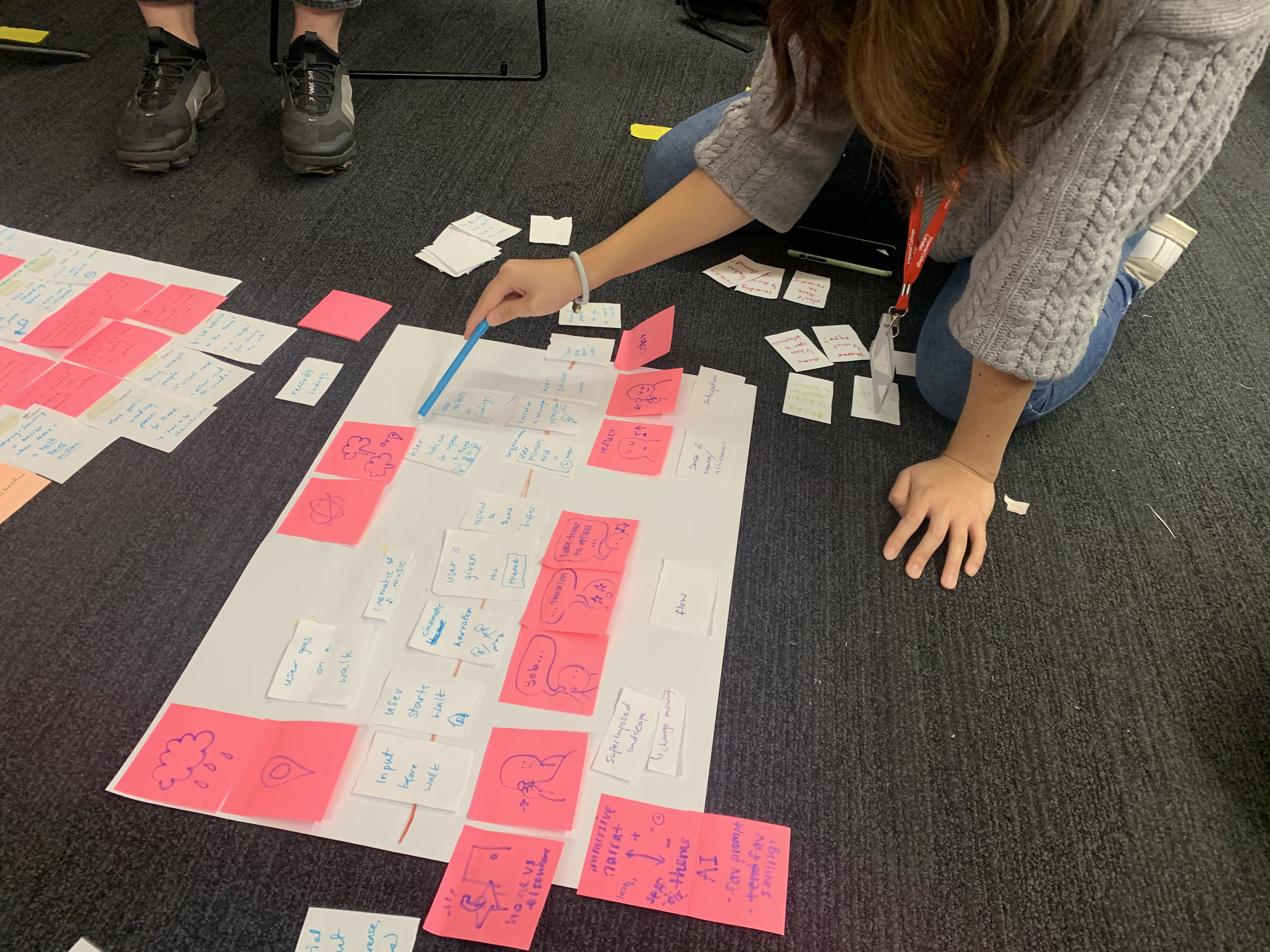

PROCESS

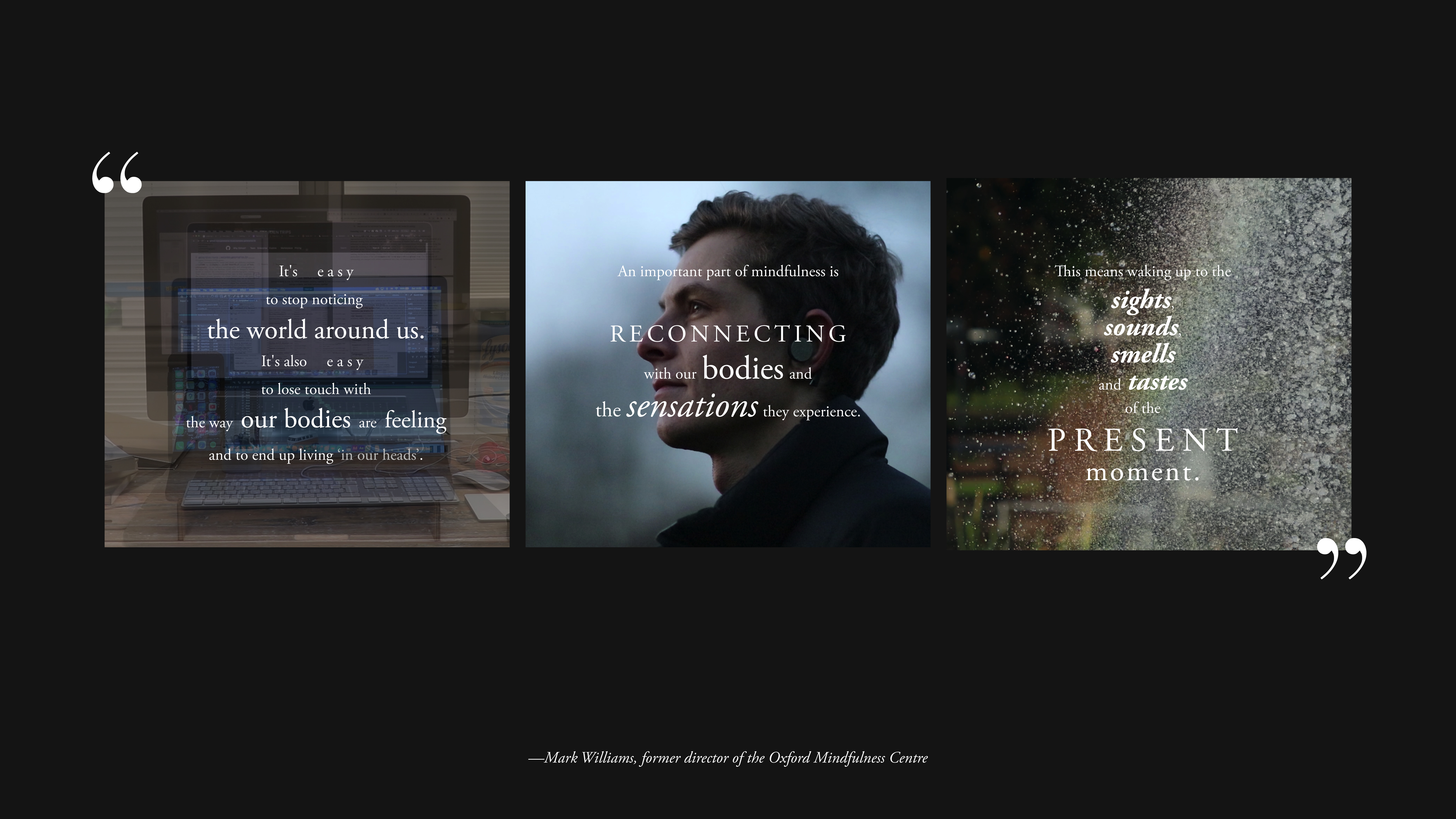

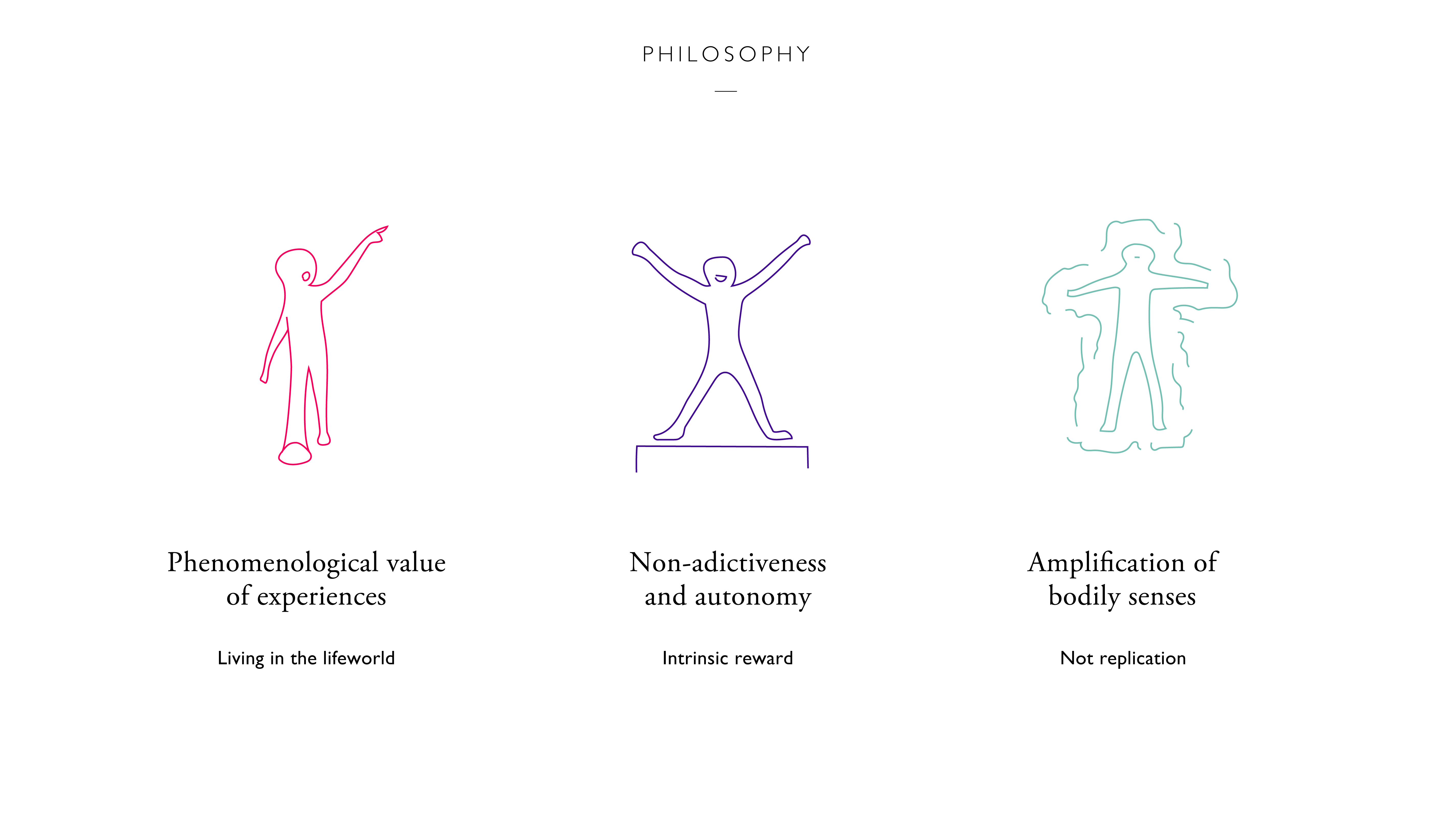

PHILOSOPHY

We began by developing a philosophical foundation for our project. We placed importance on phenomenological values, taking inspiration from Edmund Husserl’s notion of the subject in the “lifeworld”, and aimed to move away from conventional, addiction-based metrics of success by focusing on “intrinsic reward”. This led us to define the project’s scope as amplifying the senses rather than replicating them, as technologies such as VR do.

QUANTITATIVE VALIDATION

To evaluate the effectiveness of our interventions, we adopted two established quantitative metrics from the fields of mindfulness and psychedelic therapy. We used them alongside qualitative methods such as interviews to systematically analyse the impact of our experiments.

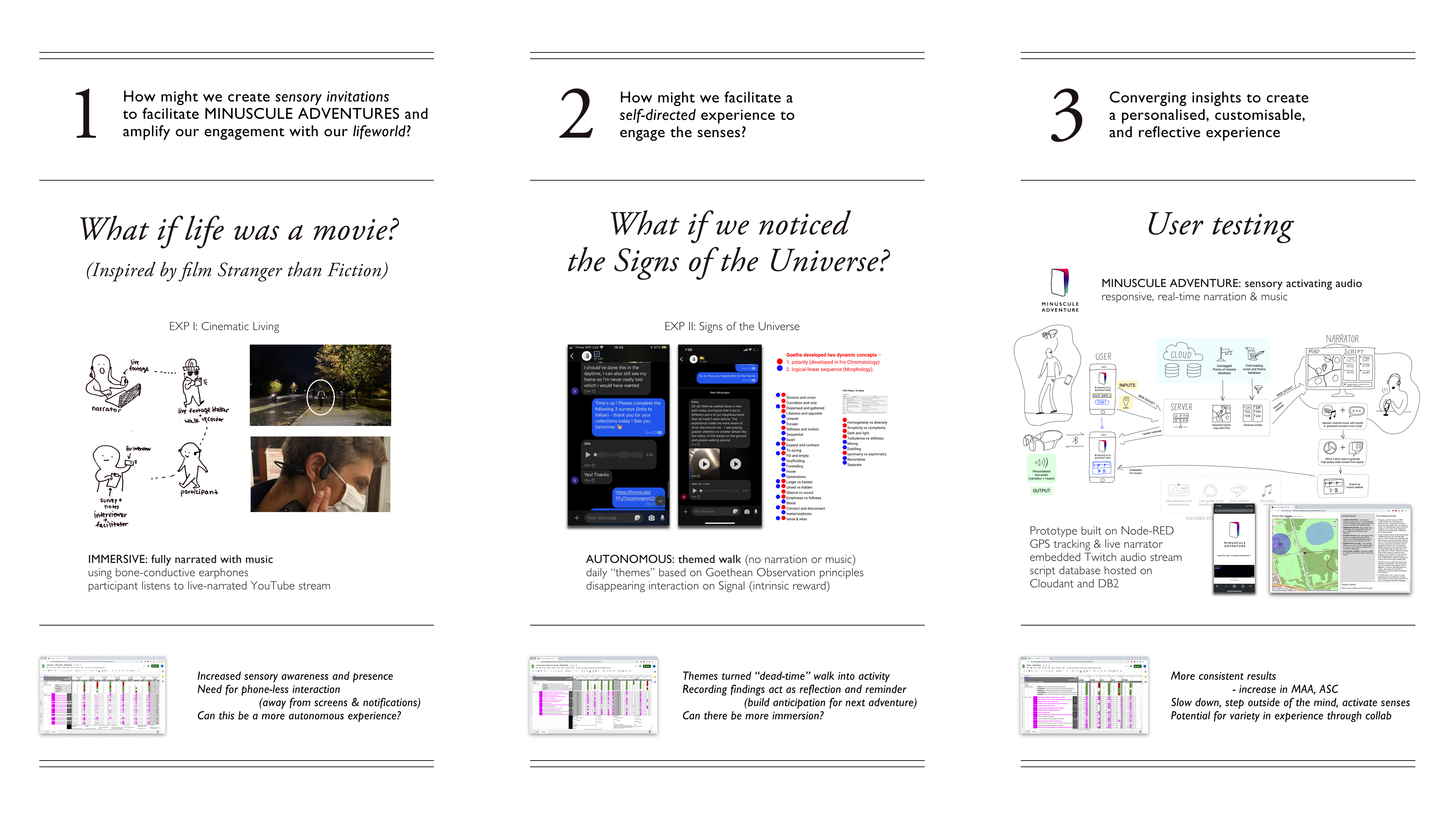

EXPERIMENTS AND INSIGHTS

The Minuscule Adventure experience was shaped by a series of experiments and insights that arose from them.

One early-stage experiment, which we dubbed “cinematic living”, proved promising. The fully immersive experience showed the potential of a narrated walk to engage the bodily senses, while also pointing to the need for a native hardware platform.

Feedback from one user about autonomy prompted us to design the “signs of the universe” experiment, in which Goethean Observation principles were applied to facilitate themed walks. Having users give feedback on the experience as voice recordings turned out to be an effective way for them to reflect and to build anticipation for the next walk.

Combining concepts from both experiments, we ran our user tests with a prototype built in Node-RED that generated scripts based on the user’s GPS location. Once the experience started, a narrator read from the scripts and controlled the music, which was fed back to the user through an embedded Twitch audio live stream.
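The Node-RED flow itself is not shown here, but its core step, choosing a script from the user's GPS position, can be sketched in Python as a simple geofence lookup. `ScriptPoint`, `select_script`, and the radius-based geofence are hypothetical stand-ins for whatever the prototype actually used. Distances use the standard haversine great-circle formula with a mean Earth radius of 6,371 km.

```python
import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


@dataclass
class ScriptPoint:
    name: str
    lat: float
    lon: float
    radius_m: float  # hypothetical geofence radius around the point
    script: str


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def select_script(lat: float, lon: float, points: list) -> Optional[str]:
    """Return the script of the nearest point whose geofence contains the user."""
    best, best_d = None, math.inf
    for p in points:
        d = haversine_m(lat, lon, p.lat, p.lon)
        if d <= p.radius_m and d < best_d:
            best, best_d = p, d
    return best.script if best is not None else None
```

Preferring the nearest matching point resolves overlapping geofences. Returning `None` outside every zone leaves the narrator free to improvise or fall back to a general script.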